Let’s say you have a table of the format:

object_id | timestamp | old_status | new_status

32049 | 2018-02-01 14:32:22 | TRUE | FALSE

32049 | 2018-03-02 12:30:20 | FALSE | TRUE

32049 | 2018-03-04 18:30:50 | TRUE | FALSE

52053 | 2019-02-09 00:30:50 | FALSE | TRUE

52053 | 2019-02-09 00:30:53 | TRUE | FALSE

52053 | 2019-02-10 16:30:56 | FALSE | TRUEWhere each entry, for simplicity’s sake, is one change in boolean status for the object: TRUE | FALSE or FALSE | TRUE. This is a fairly common history table that you’d find in the database of many applications, for example a profile settings history with a search engine visibility toggle.

Let’s say that you want to build a lookup table in your warehouse so that you can easily discover whether, on any given day, the toggle in question is set to a specific state, in this case TRUE. This happens to be the situation we found ourselves in at WayUp in order to make it easy to know whether a job was active during a given period.

This is the output we’re going for, where every row is a date where the object is active. If the object is not active during that date, there would be no entry for it.

For the example above, our work should yield the following. Since object 32053 was set to true on 2/10/2019, it is currently also true on 2/12/2019. We also will assume that both objects were created on 1/31/2018.

object_id | date

32049 | 2018-01-31 (creation as active)

32049 | 2018-02-01

32049 | 2018-03-02

32049 | 2018-03-03

32049 | 2018-03-04

52053 | 2019-02-09

52053 | 2019-02-10

52053 | 2019-02-11

52053 | 2019-02-12 (today)How do you do it?

It’s a deceptively hard problem, because the result we’re going for is a lookup table where we need dates in between, and inclusive of, the toggles that are set to TRUE. We also need dates after the last toggle was set to TRUE up through the current date. If the history table doesn’t include the initial state the object was created in (which is very often the case), we will need to handle that case as well.

Step One: Build a Date-Based History Table

We’ll first need the history of this object by date, because that’s the final format we’ll be storing the table in. (Even if your final use case for this lookup will be rollups by month or quarter, if you have it broken out by date, it will make rollups easy using SELECT DISTINCT across any time period.)

Let’s go back to our original sample table.

object_id | timestamp | old_status | new_status

32049 | 2018-02-01 14:32:22 | TRUE | FALSE

32049 | 2018-03-02 12:30:20 | FALSE | TRUE

32049 | 2018-03-04 18:30:50 | TRUE | FALSE

52053 | 2019-02-09 00:30:50 | FALSE | TRUE

52053 | 2019-02-09 00:30:53 | TRUE | FALSE

52053 | 2019-02-10 16:30:56 | FALSE | TRUE

The first thing we do, is append the current status of the object at the current time. The reason is, we need to eventually add active date rows for objects which were previously set to active, and thus are still active today. This can be done with a simple union, with a NULL for new_status.

object_id | timestamp | old_status | new_status

32049 | 2018-02-01 14:32:22 | TRUE | FALSE

32049 | 2018-03-02 12:30:20 | FALSE | TRUE

32049 | 2018-03-04 18:30:50 | TRUE | FALSE

32049 | 2019-02-12 23:59:43 (now) | FALSE | NULL

52053 | 2019-02-09 00:30:50 | FALSE | TRUE

52053 | 2019-02-09 00:30:53 | TRUE | FALSE

52053 | 2019-02-10 16:30:56 | FALSE | TRUE

52053 | 2019-02-12 23:59:43 (now) | TRUE | NULLNow it’s easy to build a day-by-day history table using some window functions. Remember the DISTINCT, otherwise you will get duplicate rows.

SELECT DISTINCT

object_id

, DATE(timestamp) AS date

, FIRST_VALUE(old_status) OVER(PARTITION BY object_id,DATE(timestamp) ORDER BY timestamp ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS old_status

, LAST_VALUE(new_status) OVER(PARTITION BY object_id,DATE(timestamp) ORDER BY timestamp ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS new_status

FROM status_historyThe output will give you the first old status and last new status of each date. Note object #52053 on 2/9: although it was active on this date, it started and ended as inactive. At some point, we’ll need to remember to account for this use case.

object_id | date | old_status | new_status

32049 | 2018-02-01 | TRUE | FALSE

32049 | 2018-03-02 | FALSE | TRUE

32049 | 2018-03-04 | TRUE | FALSE

32049 | 2019-02-12 | FALSE | NULL

52053 | 2019-02-09 | FALSE | FALSE

52053 | 2019-02-10 | FALSE | TRUE

52053 | 2019-02-12 | TRUE | NULLStep Two: Prepare the Join

You can begin to see now what we’ll need to do. We’ll need to insert new rows for each object between every row where new_status is TRUE and old_status is TRUE. These rows will need to be bounded by date. We already have an upper bound: the “date” column. But we need to add a lower bound as well–which means we need to know for each row what the previous date was that the toggle was changed. This can be accomplished with the LAG() window function.

You may realize at this point we have one more problem. For the first entry for each object, there will be no previous date: it will be NULL. This gives us an opportunity to address another use case: when the objects are created TRUE without the history table being altered. So we’ll replace that first prev_toggle cell for each object with the creation date for each object. We’re also going to offset this date by one. You’ll see why in the next step.

SELECT

object_id

, date

, old_status

, new_status

, COALESCE(

LAG(date,1) OVER(PARTITION BY object_id ORDER BY date)

, DATE(object.created_at) - INTERVAL '1 DAY'

) AS prev_toggle

FROM daily_status

JOIN object USING (object_id)The output is ready now to be easily transformed using a simple join.

object_id | date | old_status | new_status | prev_toggle

32049 | 2018-02-01 | TRUE | FALSE | 2018-01-30

32049 | 2018-03-02 | FALSE | TRUE | 2018-02-01

32049 | 2018-03-04 | TRUE | FALSE | 2018-03-02

32049 | 2019-02-12 | FALSE | NULL | 2018-03-04

52053 | 2019-02-09 | FALSE | FALSE | 2018-01-30

52053 | 2019-02-10 | FALSE | TRUE | 2019-02-09

52053 | 2019-02-12 | TRUE | NULL | 2019-02-10Step Three: Join and Union

We’re now going to do our join in two steps. First, we’re going to use a cross-join to add all the dates before the rows where old_status is TRUE. We know for a fact that every one of these dates is active.

object_id | date | old_status | new_status | prev_toggle

32049 | 2018-01-31 < ======== HERE

32049 | 2018-02-01 | TRUE | FALSE | 2018-01-30

32049 | 2018-03-02 | FALSE | TRUE | 2018-02-01

32049 | 2018-03-03 < ======== HERE

32049 | 2018-03-04 | TRUE | FALSE | 2018-03-02

32049 | 2019-02-12 | FALSE | NULL | 2018-03-04

52053 | 2019-02-09 | FALSE | FALSE | 2018-01-30

52053 | 2019-02-10 | FALSE | TRUE | 2019-02-09

52053 | 2018-02-11 < ======== HERE

52053 | 2019-02-12 | TRUE | NULL | 2019-02-10Then, we’re going to append all the dates in which a toggle was set to TRUE at some point. For this we’ll go back to our original status change table.

For this join, we’ll need a list of all objects cross-joined against all dates. This is easy using an all_dates table you can generate with a csv for this purpose.

SELECT

object_id

, date

FROM object

JOIN all_dates

ON all_dates.date >= DATE(object.created_at)

AND all_dates.date <= DATE(GETDATE())This will yield a potentially massive table even truncating the dates as shown above. It should perform fine up to a couple million objects though–OK for most practical purposes.

object_id | date

32049 | 2018-01-31

32049 | 2018-02-01

32049 | 2018-02-02

... | ...

32049 | 2019-02-12

52053 | 2018-01-31

52053 | 2018-02-01

52053 | 2018-02-02

... | ...

52053 | 2019-02-12Now, the join and the union can be done inside the same query. Remember we’re using the JOIN to create additional rows in before status_old is TRUE, using the prev_toggle field as an upper bound. The reason we had offset the creation date by one, is so we could fill in rows between creation date and a TRUE toggle, using the same bounded method. The UNION is used to append those dates in which we know the toggle to be active, and remove duplicates.

SELECT

daily_status_with_prev.object_id

, all_time_objects.date AS active_date

FROM daily_status_with_prev

JOIN all_time_objects ON

daily_status_with_prev.old_status IS TRUE

AND all_time_objects.date < daily_status_with_prev.date

AND all_time_objects.date > daily_status_with_prev.prev_toggle

AND all_time_objects.object_id = daily_status_with_prev.object_id

UNION

SELECT

status_history.object_id

, DATE(status_history.timestamp) AS active_date

FROM status_history

WHERE old_status IS TRUE

OR new_status IS TRUEThe result is exactly what we were aiming for! One row per date each object was active.

object_id | date

32049 | 2018-01-31

32049 | 2018-02-01

32049 | 2018-03-02

32049 | 2018-03-03

32049 | 2018-03-04

52053 | 2019-02-09

52053 | 2019-02-10

52053 | 2019-02-11

52053 | 2019-02-12Used correctly, this new table is extremely powerful for analytics and in rollups. It’s hard to make but well worth it!

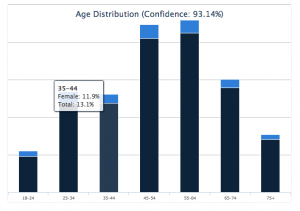

In the last couple weeks I have been pouring over data sets and trying different formulas to find the best way to break down a list of seemingly random name data into digestible information. The resulting app allows anyone to upload their mailing lists and see who’s in them, and in perhaps the coolest feature, they can segment their list as well.

In the last couple weeks I have been pouring over data sets and trying different formulas to find the best way to break down a list of seemingly random name data into digestible information. The resulting app allows anyone to upload their mailing lists and see who’s in them, and in perhaps the coolest feature, they can segment their list as well.